Adding Integration Tests to a Model Build

Validating Serving Artifact

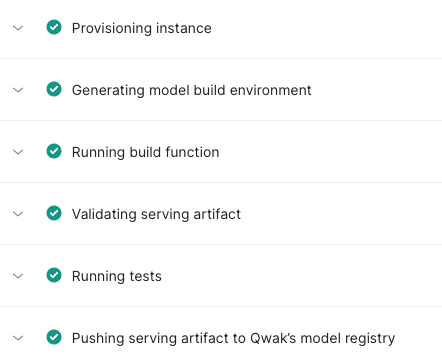

After executing the build() function in the model-building process, Qwak initiates a critical step - Validating Serving Artifact. This involves starting a Docker container encapsulating the newly built model. This container serves two primary purposes:

- Initialization: The

initialize_model()function is executed to ensure the model serving is correctly set up and started within the container. - Dummy Request Handling: The container, with its embedded webserver, is tested with a dummy request. This step confirms the container's operational status and its ability to handle incoming requests successfully.

Qwak Models Build Page
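The order of the two checks (initialize first, then answer a request) can be pictured with the plain-Python sketch below. It is a conceptual illustration only: `EchoModel`, `BrokenModel`, and `validate_serving_artifact` are hypothetical names, and the sketch calls the model directly instead of going through Qwak's Docker container and webserver.

```python
class EchoModel:
    """Hypothetical model; not Qwak's actual model base class."""

    def __init__(self):
        self.ready = False

    def initialize_model(self):
        # A real model would load weights or open connections here.
        self.ready = True

    def predict(self, rows):
        if not self.ready:
            raise RuntimeError("initialize_model() has not run")
        # Toy output: one prediction per input row.
        return [{"prediction": len(row)} for row in rows]


class BrokenModel(EchoModel):
    """Hypothetical model whose initialization fails."""

    def initialize_model(self):
        raise FileNotFoundError("model weights missing")


def validate_serving_artifact(model, dummy_request):
    """Return True only if the model initializes and answers a dummy request."""
    try:
        model.initialize_model()                 # check 1: initialization
        response = model.predict(dummy_request)  # check 2: dummy request
    except Exception:
        return False
    return bool(response)
```

With this sketch, `validate_serving_artifact(EchoModel(), [{"a": 1}])` returns `True`, while passing a `BrokenModel()` returns `False` because its initialization raises before any request is handled.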

Running Integration Tests

The container started during validation also stays up for the Running Tests phase. In this phase, the container acts as a live model and serves predictions from a local endpoint. This allows more thorough testing, so you can confirm that the model is fully functional and ready to deploy before it reaches shadow or production environments.

Inside a test, you can run predictions against the local endpoint as follows:

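```python
from qwak_inference.realtime_client.client import InferenceOutputFormat

def test(real_time_client):
    # Hypothetical input; replace with the features your model expects
    feature_vector = [{"feature_a": 1.0, "feature_b": "x"}]
    # Send the request to the model's local endpoint and get the output as pandas
    result = real_time_client.predict(feature_vector, InferenceOutputFormat.PANDAS)
    assert result is not None
```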

Here, `real_time_client` is supplied to the test already configured with the container's local endpoint, so each prediction is served by the model you just built.

Testing against the running container catches issues early and confirms that the model is ready for deployment before it leaves the build. Because these tests run as part of the build, they fit naturally into a continuous integration workflow.
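One way to keep these tests readable is to put the assertions in a plain helper that you can also exercise locally, without running a build. The sketch below rests on assumptions: `check_scores`, the `score` column, and the [0, 1] range are hypothetical and should be replaced with your model's actual output schema.

```python
def check_scores(feature_vector, rows, score_key="score"):
    """Hypothetical checks on prediction output given as a list of dicts.

    Assumes one output row per input row and a numeric score in [0, 1];
    adapt both assumptions to your model's actual schema.
    """
    assert len(rows) == len(feature_vector), "expected one prediction per input row"
    for i, row in enumerate(rows):
        assert score_key in row, f"row {i} is missing '{score_key}'"
        assert 0.0 <= float(row[score_key]) <= 1.0, f"row {i} score out of range"
    return True
```

Inside the integration test, you could call `check_scores(feature_vector, result.to_dict("records"))`, assuming the `PANDAS` output format returns a pandas `DataFrame`; `DataFrame.to_dict("records")` converts it to a list of row dictionaries.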

Place your integration tests in an `it` directory inside the `tests` folder, following the model build directory structure.
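You can create this layout by hand or with a small script such as the one below. It is a hypothetical helper (`scaffold_integration_tests` and the `test_integration.py` file name are illustrative choices): it only creates `tests/it/` under the model root you pass in and writes a starter test if none exists yet.

```python
from pathlib import Path

# Hypothetical starter test written into tests/it/ if no file exists yet.
TEMPLATE = '''\
from qwak_inference.realtime_client.client import InferenceOutputFormat


def test_predict(real_time_client):
    feature_vector = [{"feature_a": 1.0}]  # hypothetical input
    result = real_time_client.predict(feature_vector, InferenceOutputFormat.PANDAS)
    assert result is not None
'''


def scaffold_integration_tests(model_root, filename="test_integration.py"):
    """Create <model_root>/tests/it/<filename> unless it already exists."""
    it_dir = Path(model_root) / "tests" / "it"
    it_dir.mkdir(parents=True, exist_ok=True)
    test_file = it_dir / filename
    if not test_file.exists():
        # Never overwrite a test you have already written.
        test_file.write_text(TEMPLATE)
    return test_file
```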

Resource requirements

Validating the serving artifact and running tests both start the model on the build instance, so consider your model's resource requirements. For example, if you build on a small instance with the `--gpu-compatible` flag and plan to deploy on a GPU-based instance, the build instance may not have enough resources to start the model locally for inference testing. These steps are most useful when the build instance's hardware is similar to the deployment hardware.

Additional directories in tests

To access the folders added with the `--dependency-required-folders` parameter from within your tests, use the `qwak_tests_additional_dependencies` fixture. For example:

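```python
import os

def test_print_content_from_variable(qwak_tests_additional_dependencies):
    # Path of the directory that holds all --dependency-required-folders
    print(qwak_tests_additional_dependencies)
    # Each required folder appears as an entry inside that directory
    directories = os.listdir(qwak_tests_additional_dependencies)
    print(directories)
```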

All folders passed with `--dependency-required-folders` are placed inside a single directory, whose path is passed to your test as the `qwak_tests_additional_dependencies` parameter.
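To read a specific file from those folders, you can search the fixture's directory. The helper below is a hypothetical sketch that uses only the standard library; it assumes the fixture value is a filesystem path, as the `os.listdir` call in the example above implies.

```python
import os


def find_dependency_file(dependencies_root, filename):
    """Return the first path under dependencies_root whose name is filename.

    dependencies_root is assumed to be the directory path provided by the
    qwak_tests_additional_dependencies fixture.
    """
    for dirpath, dirnames, filenames in os.walk(dependencies_root):
        dirnames.sort()  # visit subfolders in a deterministic order
        if filename in filenames:
            return os.path.join(dirpath, filename)
    raise FileNotFoundError(f"{filename} not found under {dependencies_root}")
```

In a test, `find_dependency_file(qwak_tests_additional_dependencies, "config.json")` would return the path to a hypothetical `config.json` shipped in one of the required folders.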
